Explanatory AI aims to make AI systems more transparent and easier to trust. But what exactly is Explanatory AI, and how can UI/UX designers help make it work in practice? Let's look at where the technology and design meet.

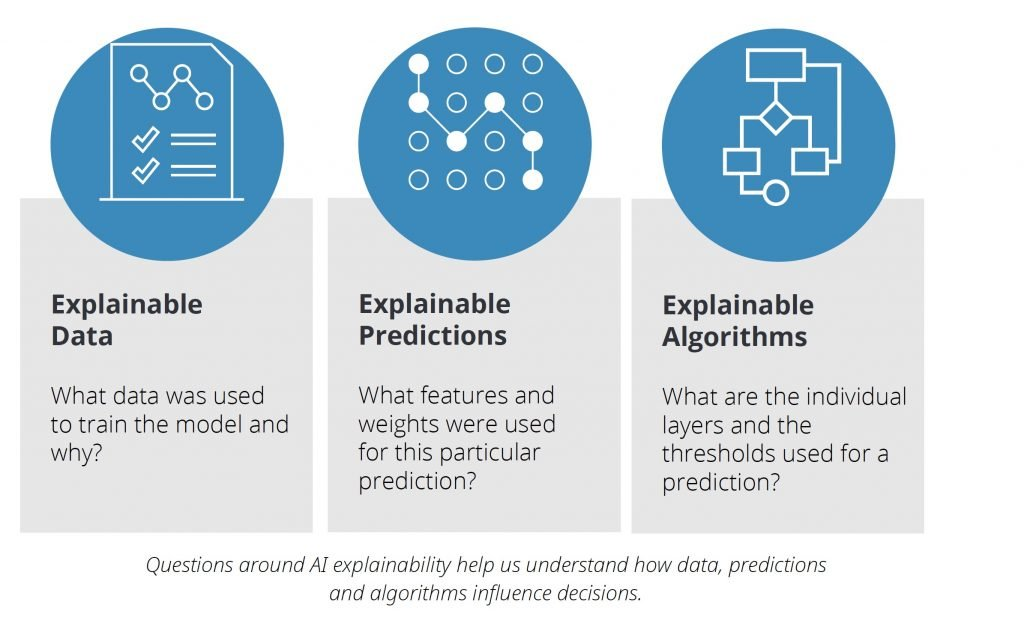

Explanatory AI, more commonly called explainable AI (XAI), is about providing explanations or justifications for the decisions and actions of AI systems. This matters most in contexts where people need to understand the reasoning behind an AI decision in order to trust it, check it, or hold someone accountable for it. But how does it work?

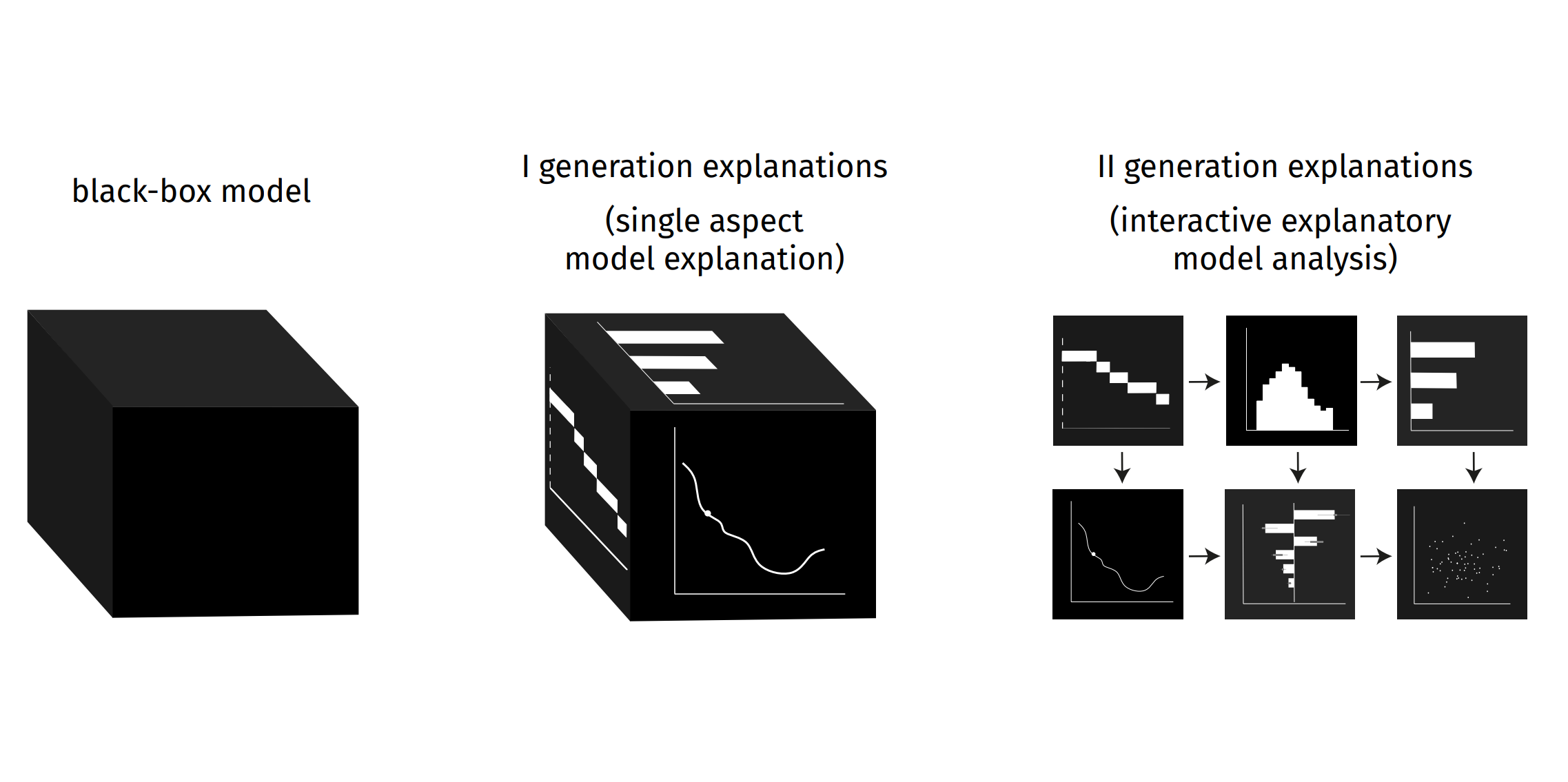

Explanatory AI employs various techniques to shed light on the decision-making process of AI models. These include identifying the features that most influence an outcome, explaining individual predictions, offering insight into how the model behaves overall, and expressing decision-making logic in human-understandable terms. The goal is to move away from the "black box" perception of AI towards a more transparent and interpretable framework.
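To make the first two techniques more concrete, here is a minimal Python sketch. It assumes a simple linear scoring model in which each feature's contribution is its weight multiplied by its value. The feature names, weights, and applicant values are hypothetical and chosen only for illustration; real systems typically rely on dedicated interpretability tooling rather than hand-written code like this.

```python
# Hypothetical linear scoring model: score = bias + sum(weight * value).
# All names and numbers below are illustrative assumptions.
WEIGHTS = {"income": 0.5, "debt": -0.75, "years_employed": 0.25}
BIAS = 1.0


def explain_prediction(features):
    """Return the score and the per-feature contributions, largest impact first."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


def to_sentence(name, contribution):
    """Express one contribution in plain language for an explanation interface."""
    direction = "raised" if contribution > 0 else "lowered"
    return f"{name.replace('_', ' ')} {direction} the score by {abs(contribution):.2f}"


applicant = {"income": 4.0, "debt": 2.0, "years_employed": 2.0}
score, ranked = explain_prediction(applicant)
sentences = [to_sentence(name, value) for name, value in ranked]
```

The ranked list is the kind of raw material a designer might receive from a data science team, and the sentences are one possible way to turn it into something a non-expert can read.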

Our Role as Designers

So, where do UI/UX designers come into the picture? Their expertise in crafting user interfaces and experiences can significantly enhance the effectiveness of Explanatory AI systems. Here’s how:

Designing Explanation Interfaces: UI/UX designers can create interfaces that present AI explanations in a clear, intuitive, and visually appealing manner. By carefully designing layouts, typography, and visual elements, they can effectively communicate complex information about AI decisions to users.

User Research: Conducting user research is key to understanding the needs, preferences, and comprehension levels of the target audience regarding AI explanations. This valuable insight informs the design of explanation interfaces tailored to users’ cognitive abilities and information-processing preferences.

Interactive Explanations: Designers can explore interactive techniques like tooltips, visualizations, or guided tours to allow users to explore AI explanations at their own pace and depth. This interactive approach enhances user engagement and comprehension.

Feedback Mechanisms: Implementing feedback mechanisms enables users to provide input on the usefulness, clarity, and comprehensibility of AI explanations. This feedback loop empowers designers to iterate and improve explanation interfaces over time, ensuring they meet users’ evolving needs.
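As a rough illustration of the feedback loop, the Python sketch below averages user ratings per explanation and flags the ones that may need a redesign. The explanation identifiers, the 1-to-5 rating scale, and the threshold of 3.0 are all assumptions made for this example, not part of any particular product.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical feedback records: (explanation_id, rating from 1 to 5).
feedback = [
    ("loan-denial-summary", 4),
    ("loan-denial-summary", 5),
    ("feature-chart", 2),
    ("feature-chart", 3),
    ("feature-chart", 1),
]


def summarize_feedback(records, threshold=3.0):
    """Average the ratings per explanation and flag those below the threshold."""
    ratings = defaultdict(list)
    for explanation_id, rating in records:
        ratings[explanation_id].append(rating)
    averages = {key: mean(values) for key, values in ratings.items()}
    needs_revision = sorted(key for key, avg in averages.items() if avg < threshold)
    return averages, needs_revision


averages, needs_revision = summarize_feedback(feedback)
```

A summary like this only shows which explanations users rate poorly. Finding out why still depends on the user research described above.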

While UI/UX designers may not need to learn entirely new skills, gaining familiarity with AI interpretability concepts and human-centered design principles for explanation interfaces can be beneficial. Collaboration with AI researchers, data scientists, and domain experts further enriches designers’ understanding and enables them to design effective Explanatory AI systems.

Explanatory AI holds real promise for fostering trust and transparency in AI systems. With the contribution of UI/UX designers, we can help build a future where AI decisions are not just understood but accepted with confidence. Let's work on it together, bridging the gap between technology and the people who use it, one explanation at a time.

Demystifying Explanatory AI: UI/UX Designers Can Make it Happen
